Basic Concepts Of K-NN Algorithm

K-NN is probably one of the simplest yet most powerful supervised learning algorithms, and it can be used for both classification and regression. It is most commonly used to classify data points that are separated into several classes, in order to predict the class of new sample data points. It is a non-parametric, lazy learning algorithm: it makes no assumption about the form of the underlying data distribution, and it has no separate training phase, deferring all computation until a prediction is requested. It classifies data points based on a similarity measure (e.g. a distance measure, most commonly Euclidean distance).

Principle: The K-NN algorithm is based on the principle that "similar things exist close to each other", or "like things are near to each other."

In this algorithm, ‘K’ refers to the number of neighbors considered when classifying a point. For binary classification it is usually chosen to be odd, so that the majority vote among the neighbors cannot end in a tie. The value of ‘K’ must be selected carefully, otherwise it can cause defects in the model. If ‘K’ is small, the model has low bias and high variance, i.e. it overfits. Conversely, if ‘K’ is very large, the model has high bias and low variance, i.e. it underfits. There has been a lot of research on selecting the right value of K; a common rule of thumb, which often gives good results in practice, is K = √n, where n is the total number of data points. If this value is odd, use it as-is; otherwise make it odd by adding or subtracting 1.
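A minimal Python sketch of the square-root rule of thumb for K. The function name `choose_k` is hypothetical, and rounding √n to the nearest integer before fixing the parity is an assumption, since the rule does not say how to handle a non-integer root. This version always adds 1 to an even value.

```python
import math


def choose_k(n: int) -> int:
    """Rule-of-thumb K for n training points: round(sqrt(n)), made odd."""
    if n < 1:
        raise ValueError("need at least one training point")
    # Assumption: round the square root to the nearest integer first
    k = round(math.sqrt(n))
    if k % 2 == 0:
        # The rule allows adding or subtracting 1; this sketch adds 1
        k += 1
    return k
```

For example, `choose_k(100)` gives 11 (√100 = 10 is even, so 1 is added) and `choose_k(50)` gives 7 (√50 ≈ 7.07 rounds to 7, which is already odd).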

fig: Classification for k=3

K-NN relies on the same simple mathematics we used in high school to measure the distance between two points on a graph. Some of the distance measures (formulae) that can be used for K-NN classification are:

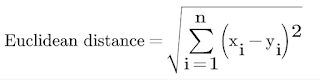

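- Euclidean Distance: $d(x, y) = \sqrt{\sum_{i=1}^{m} (x_i - y_i)^2}$

- Manhattan Distance: $d(x, y) = \sum_{i=1}^{m} |x_i - y_i|$

- Minkowski Distance: $d(x, y) = \left( \sum_{i=1}^{m} |x_i - y_i|^p \right)^{1/p}$

Here $x = (x_1, \dots, x_m)$ and $y = (y_1, \dots, y_m)$ are two data points with m features each, and $p \ge 1$ is the order of the Minkowski distance.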

For p = 1 the Minkowski distance reduces to the Manhattan distance, and for p = 2 it reduces to the Euclidean distance. So the Minkowski distance is a generalized form of both the Manhattan and the Euclidean distance.

Among these, the Euclidean distance is the most widely used.
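The three distance measures can be written directly in Python. This is a minimal sketch that assumes each data point is a sequence of numbers with the same length; the function names are illustrative. It implements Minkowski once and derives the other two from it with p = 1 and p = 2, mirroring the generalization described above.

```python
from typing import Sequence


def minkowski(x: Sequence[float], y: Sequence[float], p: float) -> float:
    """Minkowski distance of order p (p >= 1) between two equal-length points."""
    if len(x) != len(y):
        raise ValueError("points must have the same number of features")
    if p < 1:
        raise ValueError("p must be at least 1")
    # Sum the p-th powers of the absolute coordinate differences, then take the p-th root
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1 / p)


def manhattan(x: Sequence[float], y: Sequence[float]) -> float:
    # Minkowski with p = 1: sum of absolute coordinate differences
    return minkowski(x, y, 1)


def euclidean(x: Sequence[float], y: Sequence[float]) -> float:
    # Minkowski with p = 2: straight-line distance
    return minkowski(x, y, 2)
```

For example, `euclidean((0, 0), (3, 4))` returns `5.0` and `manhattan((0, 0), (3, 4))` returns `7.0`.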

Algorithm for K-NN:

1. Load the given data file into your program.

2. Initialize the number of neighbors to consider, i.e. ‘K’ (usually an odd number).

3. For the data point (tuple) to be classified, the query point:

i. Calculate the distance between the query point and each data point in the given data file.

ii. Store each distance alongside its corresponding data point (for example, by adding a distance column to the data).

iii. Once all distances are computed, sort the data from smallest to largest distance (ascending order).

4. Pick the first K entries from the sorted collection of data.

5. Observe the labels of the selected K entries.

6. For classification, return the mode (most frequent value) of the K labels; for regression, return the mean of the K values.
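The steps above can be sketched in Python as a brute-force predictor for a single query point. This is a minimal illustration, not the author's implementation: the function name `knn_predict`, the (features, target) data layout, and the use of Euclidean distance via `math.dist` (Python 3.8+) are assumptions. Ties in the classification vote go to the tied label that appears first among the sorted neighbors, because `Counter.most_common` orders equal counts by first occurrence; this is one more reason an odd K is preferred for two classes.

```python
import math
from collections import Counter
from statistics import mean


def knn_predict(data, query, k, task="classification"):
    """Predict the target of one query point with brute-force K-NN.

    data  -- list of (features, target) pairs; features is a tuple of numbers
    query -- tuple of numbers with the same length as each features tuple
    task  -- "classification" (return the mode) or "regression" (return the mean)
    """
    if not 1 <= k <= len(data):
        raise ValueError("k must be between 1 and the number of data points")
    # Steps 3.i and 3.ii: Euclidean distance from the query to every data point,
    # kept alongside that point's target
    scored = [(math.dist(features, query), target) for features, target in data]
    # Step 3.iii: sort by distance in ascending order
    scored.sort(key=lambda pair: pair[0])
    # Steps 4 and 5: take the first k entries and collect their targets
    nearest = [target for _, target in scored[:k]]
    # Step 6: mode for classification, mean for regression
    if task == "classification":
        return Counter(nearest).most_common(1)[0][0]
    if task == "regression":
        return mean(nearest)
    raise ValueError("task must be 'classification' or 'regression'")


# Example: two small clusters labelled "A" and "B"
points = [((1.0, 1.0), "A"), ((1.0, 0.5), "A"), ((1.5, 2.0), "A"),
          ((3.0, 4.0), "B"), ((3.5, 5.0), "B"), ((4.5, 5.0), "B")]
print(knn_predict(points, (1.2, 1.0), k=3))  # prints A
print(knn_predict(points, (4.0, 5.0), k=3))  # prints B
```

Because nothing is precomputed, every call computes and sorts the distances to the whole data set. That is the lazy-learning behaviour described at the start, and it is also why prediction slows down as the data grows.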

Decision Boundary for K-NN:

fig: Decision boundary for classification using the K-NN algorithm (source: stackoverflow.com)

Advantages and Disadvantages of K-NN:

Advantages:

1. The K-NN algorithm is quite easy to implement, as it does not involve much mathematics.

2. The K-NN algorithm can solve both classification and regression problems.

Disadvantages:

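1. The algorithm becomes much slower as the size of the data increases, because every prediction has to compute the distance from the query point to every stored data point.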

Further reading: Implementation of KNN (from scratch in Python)
